2025-01-03

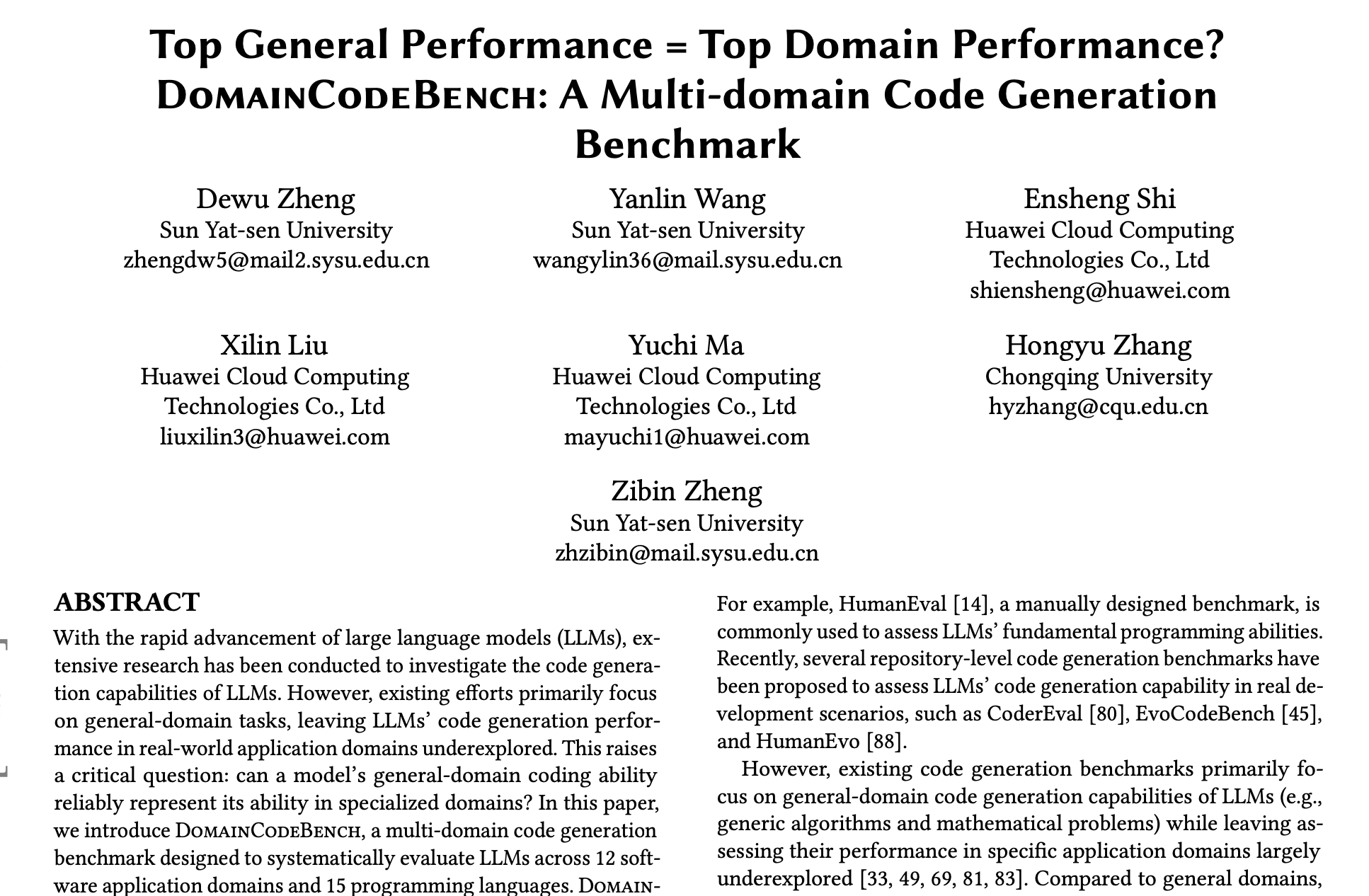

Which Model for What Code: In Graphs#

I recently stumbled across the MultiCodeBench paper. The premise had me genuinely excited: they're testing whether LLMs that ace general coding benchmarks (like HumanEval) are just as good at domain-specific tasks. Spoiler: they aren't.

In this post:

- Quick reference graphs

- Some interesting patterns

- My quibbles with the paper

Domain-specific evals is a really awesome idea. Most of the coding assistants out there (Cursor, Windsurf, etc) let you choose the model - so knowing which to use for different projects? Having actual data on what to use for web dev vs data analytics? Tremendously useful. I was excited to see the results.

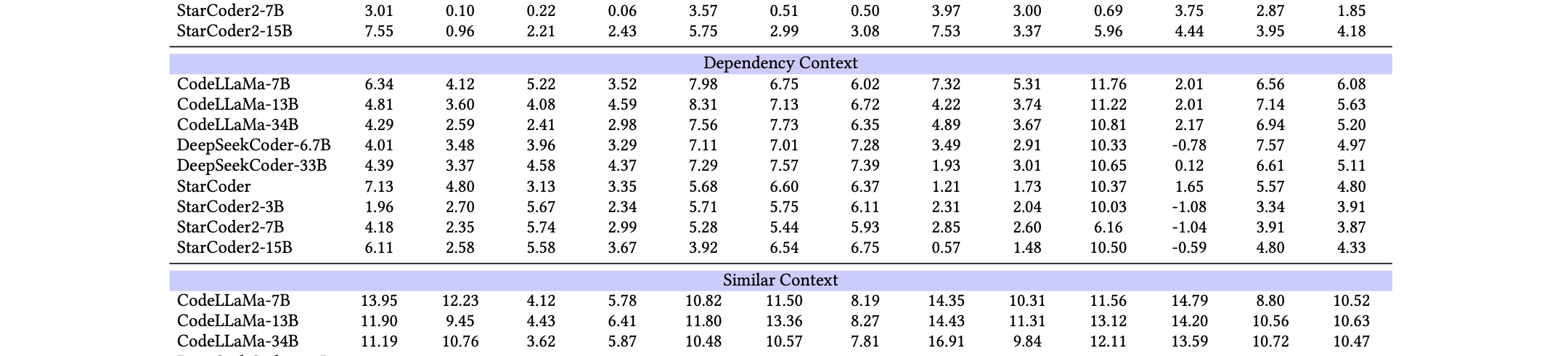

Tables. So many tables.

I was hoping for a quick reference to help me choose the right model for the right task. Unfortunately, the paper mostly gives us a bunch of dense tables - the information is there, but you need to dig. I've generally forgotten the first model's score by the time I get to the tenth, so it becomes a frustrating exercise in cross-referencing.[1]

So I created an eval cheat sheet.

The first graph is the most useful if you want a quick reference for practical application; the next two are more interesting for visualizing the interesting patterns the paper found.

Aforementioned Interesting Patterns#

Once I got these visualizations up and running, some interesting patterns jumped out:

-

Everyone smashes blockchain but flops on web dev. This pattern is consistent across nearly every model tested. I would've assumed there's much more web dev pre-training data available than blockchain code, so this result is counterintuitive. It makes me wonder if there's something about the structure of blockchain problems that makes them more amenable to LLM solutions, or if the scoring methodology systematically favors certain domains.

-

Your model's last name matters more than its size. Look at robotics, for instance: DeepSeekCoder models beat CodeLLaMA models, which beat StarCoder models, pretty much regardless of how many parameters they're lugging around. GPT-4 sits surprisingly middle-of-the-pack in many domains despite crushing general coding benchmarks. Family resemblance is stronger than weight class.

-

No patterns between related domains. I went hunting for correlations between conceptually related domains (like mobile and web), but came up empty-handed. Each domain seems to be its own special snowflake of challenges. However, possibly I didn't look hard enough.

Can we trust this benchmark?#

Benchmarks are hard. Especially with LLMs, which are so easily swayed by the slightest change in prompt, and come with so much preknowledge. This one's no exception.

- Prompts: The general prompt structure here doesn't really match how we use LLMs in the wild. The docstring approach gives a level of structure and constraining that the vibecoder zeitgeist is moving away from - most of us give user-story prompts describing the effects we want instead getting into spec details. I worry that the benchmark results won't match real-world usage.

- Data leakage: They're pulling problems from the most starred GitHub repos. That's pretty much a guarantee the code already exists in the training datasets. Even with rewritten docstrings, this raises questions about whether we're testing memorization or generation.

These are understandable - they let the authors automate the benchmark setup, so they can cover a lot more ground. Everything's always a tradeoff between quantity and quality, and this is a fair approach at balance.

That said, it's still important to keep in mind. For personal practical application, it's still most useful to start building your own internal set of evals that match your general requirements prompting style.

Benchmarks are always a useful start, but shouldn't be the final word.